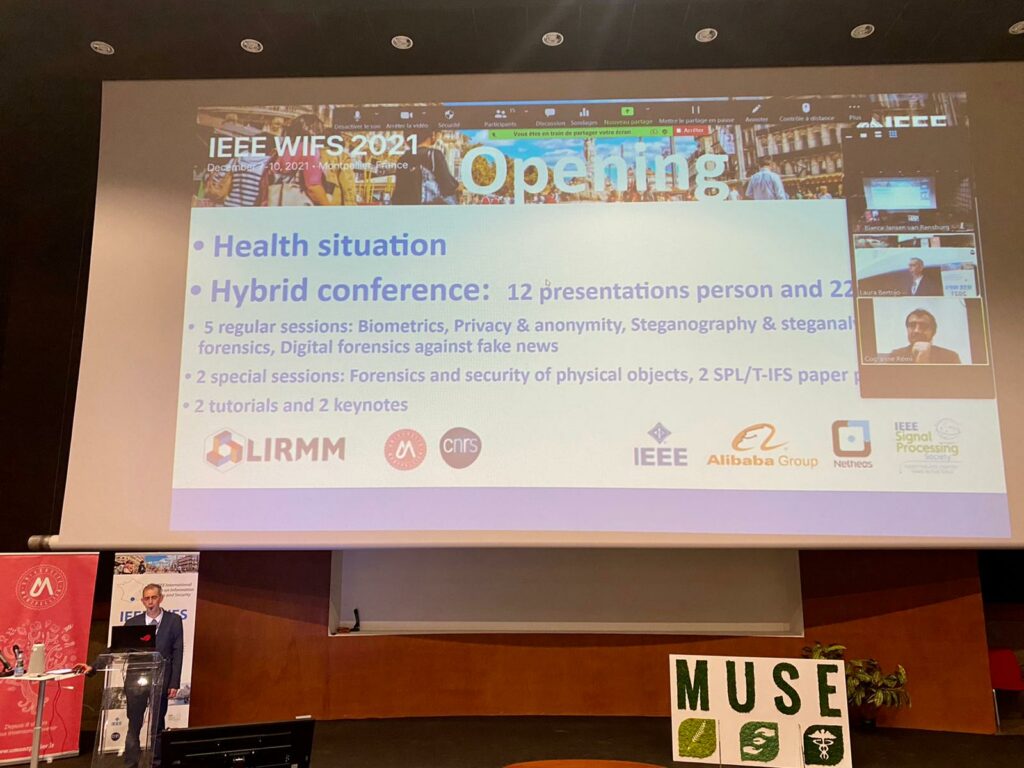

13th IEEE International Workshop on Information Forensics and Security

December 7 – 10, 2021, Montpellier, France

WIFS is the primary annual event organized by the IEEE Information Forensics and Security (IFS) Technical Committee of the IEEE Signal Processing Society. It aims to bring together researchers from relevant disciplines to discuss challenges, exchange ideas, and share state-of-the-art results and technical expertise in the areas of information security and forensics.

Due to the global COVID-19 pandemic, IEEE WIFS 2021 will be held as a hybrid conference. Participants from Europe are asked to attend the conference in person (although this is not mandatory), and those from other countries/regions who cannot attend physically can attend virtually.

We expect 30 participants (except for the tutorials) in a conference room with more than 150 seats. The doors will remain open. Masks will be mandatory, as in all buildings of our university (except during coffee breaks).

The Health Pass is mandatory to enter the conference (and also for lunch, the welcome reception, events, the gala, restaurants, hotels, …).

You have to import your health pass into the French “antiCovid” system. Please check the official website: https://www.diplomatie.gouv.fr/en/coming-to-france/coronavirus-advice-for-foreign-nationals-in-france/

This page from Lonely Planet (https://www.lonelyplanet.com/articles/france-covid-health-pass-for-tourists-extension) states that “If you’re traveling from the EU or any country signed up to the EU digital COVID cert program, you can present your digital COVID-19 certificate or any approved European health certificate that documents your vaccination or testing status. The French embassy in Germany confirms that if the certificate issued to you appears with a European flag, your certificate is compatible and will be recognized in France in the same way as French certificates.”

Do not hesitate to send a message to contact-wifs2021@lirmm.fr if you have any further questions.

News

2021/12/10 – Congratulations to the two winners of the awards: Mathias Ibsen (Hochschule Darmstadt) and Dongjie Chen (University of California, Davis). More details are available here.

2021/12/05 – The second tutorial and the two keynotes will be broadcast on YouTube! Watch them at this link.

2021/11/22 – Presentation instructions are now available. More details can be found here.

2021/11/16 – Five articles have been selected for the best paper and best student paper awards! The results will be announced on Friday, December 10, at 4:50 PM (UTC+1). The list can be found here.

2021/11/02 – The list of 8 SPL/T-IFS papers accepted for oral presentation has been published and included in the program.

2021/10/09 – The deadline for presenting SPL/T-IFS papers accepted over the past 12 months has been postponed to October 20, 2021. If interested, please send an email to contact-wifs2021@lirmm.fr with the title, the authors and the DOI of your paper.

2021/10/09 – The second tutorial has been announced!

Title: Physiological forensics

Authors: Min Wu, University of Maryland – College Park (US) and Chau-Wai Wong, North Carolina State University (US)

More details are available here.

2021/10/09 – We are honored to announce two new sponsors: Alibaba Group and Netheos.

2021/09/24 – The second keynote has been announced!

Title: Multimedia data recovery and its related workflows in digital forensics

Author: Patrick De Smet, Belgian National Institute of Criminalistics and Criminology, Brussels, Belgium

More details are available here.

2021/09/23 – The list of accepted papers is available here.

2021/09/22 – The first keynote has been announced!

Title: Is Machine Learning Security IFS-business as usual?

Author: Teddy Furon, INRIA, Rennes, France

More details are available here.

2021/09/09 – The first tutorial has been announced!

Title: Data detectives – identifying data lean applications/services and methods of self defense

Authors: Robert Altschaffel and Stefan Kiltz, University of Magdeburg, Germany

More details are available here.

2021/09/06 – The rebuttal phase has started. Authors can now access the reviewers' comments and have until the end of the week to respond to the main concerns raised by the reviewers.

2021/09/06 – Due to the global pandemic of COVID-19, IEEE WIFS 2021 will be held as a hybrid conference. In other words, participants from Europe are asked to attend the conference in person, and those from other countries/regions who cannot physically attend the conference can attend virtually.

2021/07/13 – We have decided to extend the deadline for regular and Special Session (SS) paper submission to July 21, 2021, with a few more days allowed to upload the final versions of the submitted papers.

2021/06/09 – The submission platform is now open! More details are available here.

2021/06/01 – We are honored to announce our first three sponsors: IEEE, IEEE SPS and Université de Montpellier.

2021/06/01 – Information for financial supporters is now available in an updated version of the call for papers. More details are available here.

2021/04/26 – The second and third Special Sessions on Security for Healthcare Applications and 3D Security have been announced. More details are available here.

2021/04/20 – The first Special Session on Forensics and Security of Physical Objects has been announced. More details are available here.

2021/03/18 – The call for papers can be downloaded here (PDF format): WIFS2021_CFP.